Project Introduction

This project addresses the challenge of scarce annotations by combining deep learning with active learning (AL) techniques, reducing annotation cost and effort while maintaining high segmentation accuracy and improving clinical workflow efficiency.

This work presents several AL methods contributing to cost-effective medical image annotation, including ensemble-based AL, semi-supervised AL (SSAL), and clustering-based diversity sampling.

These studies led to the creation of a biobank of echocardiographic images in which samples are ranked by diversity, so that clinicians label only the most informative data and maximise AI model improvement with minimal manual effort.

Dataset

Fig. 1 A sample image from the Unity dataset.

Three echocardiographic datasets were used for experimentation: CAMUS, Unity, and Consensus.

- CAMUS dataset: Public, fully annotated dataset for 2D echocardiographic assessment. Details: CAMUS Challenge

- Unity dataset: Private dataset of 1,224 apical four-chamber echocardiogram videos from Imperial College Healthcare NHS Trust’s database. Ethically approved (IRAS ID 243023). Contains 2,800 labelled images (70% train, 15% validation, 15% test).

- Consensus dataset: Testing dataset of 100 A4C videos (200 ED and ES frames) labelled by 11 experts. The mean annotation served as the ground truth for robust benchmarking.

Network Architecture

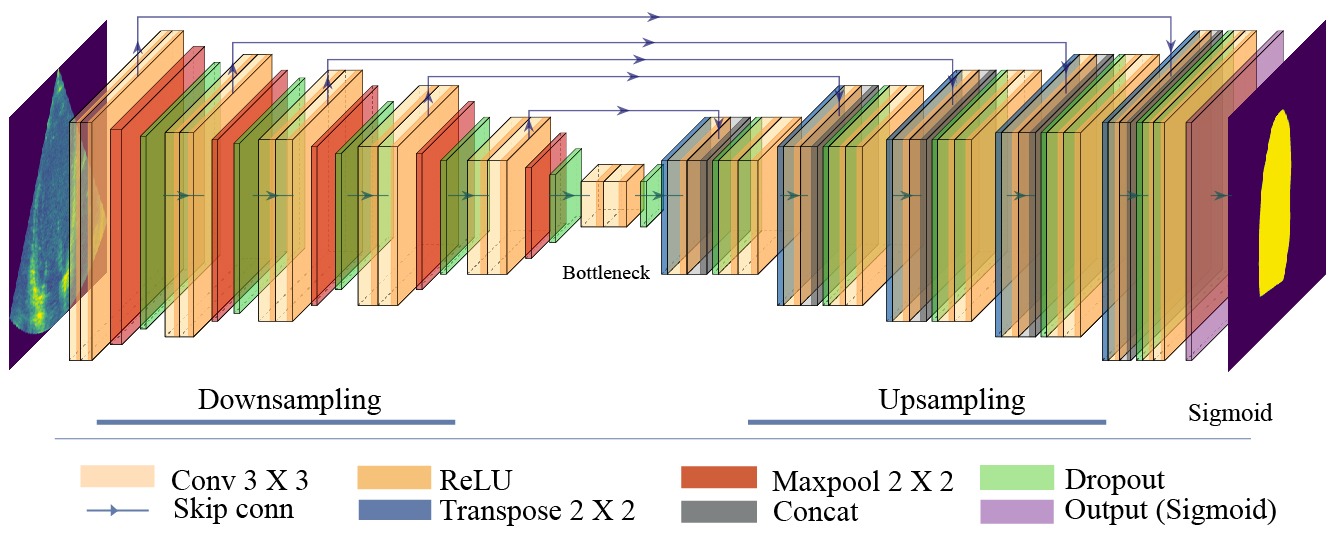

Fig. 2 The customised Bayesian U-Net architecture.

A Monte Carlo Dropout (MCD) U-Net with a depth of 5 was used for Bayesian active learning in left ventricle (LV) segmentation tasks.

Keeping the dropout layers active at inference time allows uncertainty to be estimated from repeated stochastic forward passes.

The model was implemented in TensorFlow and run on an NVIDIA RTX 3090 GPU.
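As a rough illustration of how dropout can yield an uncertainty estimate, the sketch below keeps dropout switched on at inference time and repeats the forward pass several times. It uses a toy one-layer NumPy model rather than the U-Net described here; `toy_forward`, the weights, the dropout rate, and the number of passes are all hypothetical choices made for the example.

```python
import numpy as np

def toy_forward(x, w, rng, drop_rate=0.5):
    """One stochastic forward pass: dropout on the inputs, then a sigmoid unit per pixel."""
    keep = rng.random(x.shape) >= drop_rate                  # Bernoulli keep-mask
    x_dropped = np.where(keep, x / (1.0 - drop_rate), 0.0)   # inverted-dropout scaling
    logits = x_dropped @ w                                   # (pixels, features) @ (features,)
    return 1.0 / (1.0 + np.exp(-logits))

def mc_dropout_predict(x, w, n_passes=20, seed=0):
    """Return the per-pixel mean and variance over n_passes stochastic passes."""
    rng = np.random.default_rng(seed)
    samples = np.stack([toy_forward(x, w, rng) for _ in range(n_passes)])
    return samples.mean(axis=0), samples.var(axis=0)

# Toy input: 16 "pixels" with 8 features each (illustrative data only).
x = np.random.default_rng(1).normal(size=(16, 8))
w = np.linspace(-1.0, 1.0, 8)
mean_prob, variance = mc_dropout_predict(x, w)
```

With a Keras model, the same effect is commonly obtained by calling the model with `training=True` so that the dropout layers stay active during prediction.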

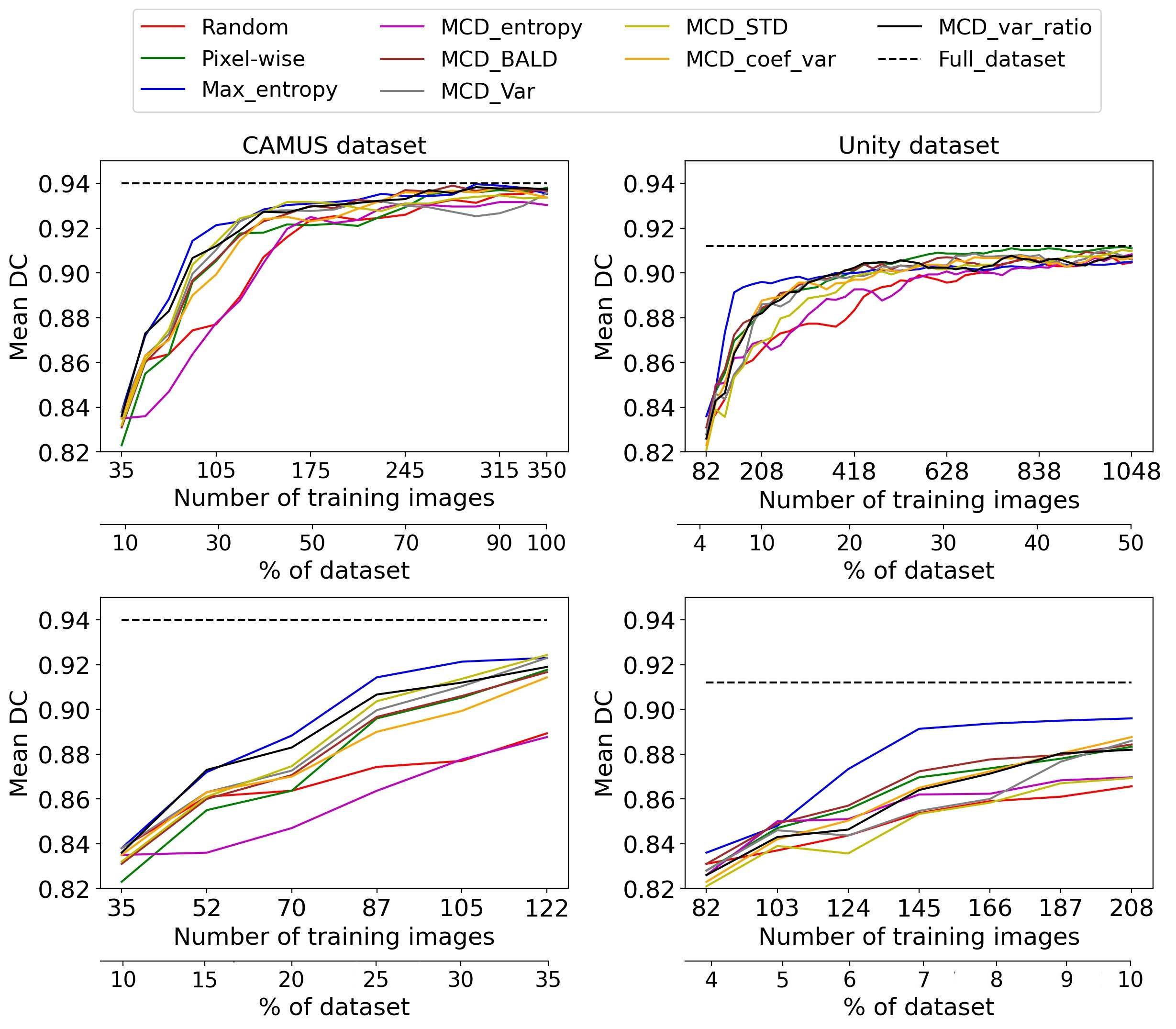

Study 1: Active Learning for Left Ventricle Segmentation

This study evaluated existing AL strategies (uncertainty-based and representativeness-based methods), establishing a strong baseline for further experiments.

Datasets: CAMUS and Unity

Framework: Pool-based AL with iterative model retraining.

Uncertainty Methods: Pixel-wise, Max Entropy, MCD Ensemble (Entropy, Variance, BALD)
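The following is a minimal sketch of how a pool-based loop and the MCD ensemble scores might fit together, not the code used in the study. It assumes binary foreground probabilities and averages each score over pixels to rank images, which is an assumption; `acquisition_scores`, `pool_based_al`, `train_fn`, and `score_fn` are hypothetical names.

```python
import numpy as np

EPS = 1e-7

def binary_entropy(p):
    """Pixel-wise entropy of a foreground probability map."""
    p = np.clip(p, EPS, 1.0 - EPS)
    return -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))

def acquisition_scores(samples):
    """samples: (T, H, W) foreground probabilities from T stochastic passes of one image."""
    mean_p = samples.mean(axis=0)
    predictive_entropy = binary_entropy(mean_p)              # entropy of the mean prediction
    expected_entropy = binary_entropy(samples).mean(axis=0)  # mean entropy of each pass
    return {
        "entropy": float(predictive_entropy.mean()),
        "variance": float(samples.var(axis=0).mean()),
        # BALD: mutual information between the prediction and the model parameters
        "bald": float((predictive_entropy - expected_entropy).mean()),
    }

def pool_based_al(pool_ids, initial_ids, train_fn, score_fn, query_size, n_rounds):
    """Retrain, score the unlabelled pool, and move the top-scoring items to the labelled set."""
    labelled = list(initial_ids)
    seen = set(labelled)
    unlabelled = [i for i in pool_ids if i not in seen]
    history = []
    for _ in range(n_rounds):
        model = train_fn(labelled)                           # retrain on the current labels
        history.append((len(labelled), model))
        if not unlabelled:
            break
        scores = np.asarray(score_fn(model, unlabelled))     # higher means more informative
        top = np.argsort(-scores)[:query_size]
        picked = [unlabelled[i] for i in top]                # these would go to the annotator
        labelled.extend(picked)
        picked_set = set(picked)
        unlabelled = [i for i in unlabelled if i not in picked_set]
    return labelled, history
```

In practice `score_fn` would run the stochastic passes for each pool image and return one of the entries of `acquisition_scores`. With a single pass (T = 1) the variance and BALD entries are zero and only the entropy term remains.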

Implementation:

- Framework: TensorFlow + Keras

- Loss: Binary Cross-Entropy

- Optimiser: Adam (lr = 0.0001)

- Epochs: 200 (early stopping, patience=10)

- Batch size: 8
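The settings listed above could be wired into Keras roughly as follows. This is a sketch under assumptions: `TrainConfig` and `compile_and_fit` are hypothetical names, and the monitored quantity for early stopping (`val_loss`) is not stated in the source.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as listed in the implementation notes."""
    learning_rate: float = 1e-4
    epochs: int = 200
    patience: int = 10
    batch_size: int = 8
    loss: str = "binary_crossentropy"

def compile_and_fit(model, train_data, val_data, cfg=TrainConfig()):
    """Hypothetical helper: `model` is a tf.keras.Model, data are (x, y) tuples."""
    import tensorflow as tf  # imported lazily so the config can be inspected without TensorFlow

    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=cfg.learning_rate),
        loss=cfg.loss,
    )
    early_stop = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss",   # assumption: the monitored metric is not given in the source
        patience=cfg.patience,
    )
    x_train, y_train = train_data
    return model.fit(
        x_train, y_train,
        validation_data=val_data,
        epochs=cfg.epochs,
        batch_size=cfg.batch_size,
        callbacks=[early_stop],
    )
```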

Results:

Max Entropy achieved 97.7% of full dataset performance on CAMUS using 25% of data, and ~99% on Unity using 20% of labels.

Fig. 3 Active learning performance on CAMUS and Unity datasets.

More details: ScienceDirect Paper

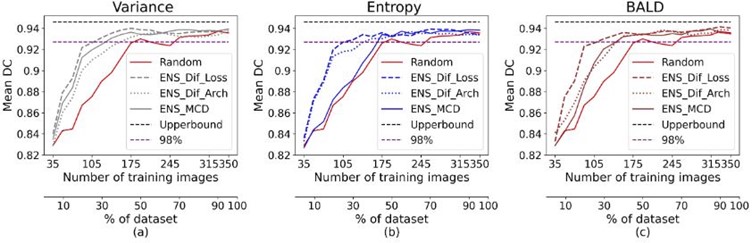

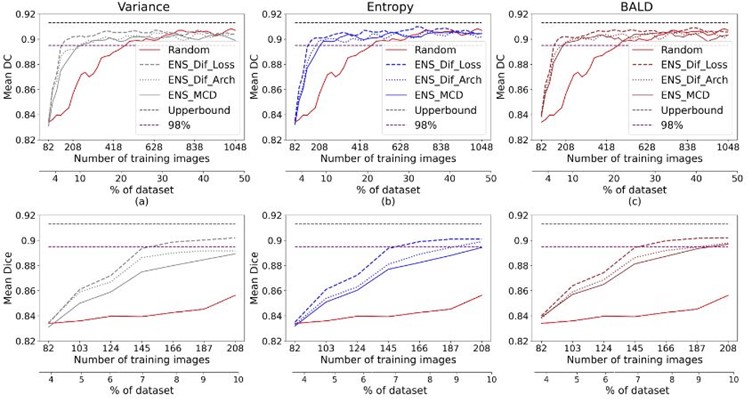

Study 2: Ensemble-Based Active Learning

This study explored ensemble-based AL using different loss functions and architectures.

Approaches:

- MCD Ensemble

- Multi-Architecture Ensemble (U-Net, ResUNet, ResUNet++)

- Multi-Loss Ensemble (BCE, Dice, BCE+Dice)
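To make the multi-loss idea concrete, here is a NumPy sketch of the three losses and of one simple way to turn ensemble disagreement into an acquisition score. The smoothing constant, the unweighted BCE+Dice sum, and the use of variance as the disagreement measure are assumptions for illustration; the source does not specify them.

```python
import numpy as np

EPS = 1e-7

def bce_loss(y_true, y_pred):
    """Binary cross-entropy averaged over pixels."""
    p = np.clip(y_pred, EPS, 1.0 - EPS)
    return float(-(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p)).mean())

def dice_loss(y_true, y_pred, smooth=1.0):
    """Soft Dice loss; `smooth` avoids division by zero on empty masks."""
    intersection = (y_true * y_pred).sum()
    dice = (2.0 * intersection + smooth) / (y_true.sum() + y_pred.sum() + smooth)
    return float(1.0 - dice)

def bce_dice_loss(y_true, y_pred):
    """Unweighted sum of the two losses (assumed weighting)."""
    return bce_loss(y_true, y_pred) + dice_loss(y_true, y_pred)

def ensemble_disagreement(member_probs):
    """member_probs: (M, H, W) predictions from M ensemble members for one image."""
    return float(np.var(member_probs, axis=0).mean())
```

Each ensemble member would be trained with one of the three losses, and images on which the members disagree most would be prioritised for annotation.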

Results:

- Multi-loss ensemble achieved >98% of full performance with <30% data on CAMUS.

- On Unity, 98% performance was reached with only 7% labelled samples.

Ensemble-based AL results on CAMUS dataset.

Ensemble-based AL results on Unity dataset.

More details: Frontiers in Physiology, 2023

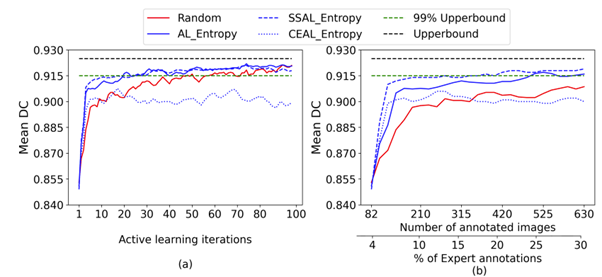

Study 3: Semi-Supervised Active Learning (SSAL)

This study proposes a semi-supervised AL framework to reduce manual annotation requirements by leveraging reliable pseudo-labels.

Key Idea:

Select pseudo-labels from images with mid-range uncertainty, validate their reliability with a variance threshold, and combine them with expert annotations for retraining.
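One possible reading of this selection step is sketched below. The quantile bounds that define "mid-range" and the variance threshold value are hypothetical, as are the function names; the source does not give the actual values or the exact rule.

```python
import numpy as np

def select_pseudo_labels(uncertainty, pred_variance,
                         low_q=0.25, high_q=0.75, var_threshold=0.01):
    """Return indices of unlabelled images whose predictions are kept as pseudo-labels."""
    lo, hi = np.quantile(uncertainty, [low_q, high_q])
    mid_range = (uncertainty >= lo) & (uncertainty <= hi)  # skip the easiest and hardest images
    reliable = pred_variance <= var_threshold              # variance check on the prediction
    return np.flatnonzero(mid_range & reliable)

def build_training_set(expert_ids, pseudo_ids):
    """Expert annotations take priority; pseudo-labels are added for the remaining images."""
    expert = set(expert_ids)
    pseudo = sorted(set(int(i) for i in pseudo_ids) - expert)
    return sorted(expert), pseudo
```

The returned index lists would then be used to assemble the retraining set, with the model's own predictions serving as labels for the pseudo-labelled images.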

Results:

Achieved 99% accuracy using only 7% labelled data, reducing annotation effort by up to 93%.

Fig. 4 SSAL results showing efficient annotation performance.

More details: OpenReview Paper

Study 4: Diversity Sampling using Clustering-Based AL

This study introduced an optimised clustering-based AL method that uses Fuzzy C-Means (FCM) to improve diversity sampling compared with Greedy K-Center and random sampling.

Highlights:

- Uses PCA for feature-space dimensionality reduction.

- Combines diversity and uncertainty for optimal image selection.

- Results pending publication.
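Since the method is not yet published, the sketch below only illustrates the general ingredients named above: PCA for dimensionality reduction, standard Fuzzy C-Means clustering, and a combined diversity and uncertainty score. The weighted-sum rule with `alpha`, the choice of one sample per cluster, and all function names are assumptions, not the study's actual procedure.

```python
import numpy as np

def pca_reduce(features, n_components):
    """Project centred features onto the top principal components (via SVD)."""
    centred = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:n_components].T

def fuzzy_c_means(x, n_clusters, m=2.0, n_iter=100, tol=1e-5, seed=0):
    """Standard FCM; returns cluster centres and the (n_samples, n_clusters) memberships."""
    rng = np.random.default_rng(seed)
    u = rng.random((x.shape[0], n_clusters))
    u /= u.sum(axis=1, keepdims=True)                  # memberships sum to 1 per sample
    for _ in range(n_iter):
        um = u ** m
        centres = (um.T @ x) / um.sum(axis=0)[:, None]
        dist = np.linalg.norm(x[:, None, :] - centres[None, :, :], axis=2) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        new_u = inv / inv.sum(axis=1, keepdims=True)   # membership update
        shift = np.abs(new_u - u).max()
        u = new_u
        if shift < tol:
            break
    return centres, u

def select_diverse_uncertain(features, uncertainty, n_select, n_components=2, alpha=0.5):
    """Pick one image per cluster, scoring by membership (diversity) and uncertainty."""
    z = pca_reduce(features, n_components)
    _, u = fuzzy_c_means(z, n_clusters=n_select)
    span = uncertainty.max() - uncertainty.min()
    unc = (uncertainty - uncertainty.min()) / (span + 1e-12)  # rescale to [0, 1]
    available = np.ones(len(features), dtype=bool)
    picked = []
    for c in range(n_select):
        score = alpha * u[:, c] + (1.0 - alpha) * unc
        best = int(np.argmax(np.where(available, score, -np.inf)))
        picked.append(best)
        available[best] = False                        # never pick the same image twice
    return picked
```

Setting `alpha=1.0` gives pure diversity sampling and `alpha=0.0` gives pure uncertainty sampling, which makes it easy to compare the two extremes on the same pool.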

Future Work

Future research directions include:

- Integrating AL with Semi-/Self-Supervised Learning for low-label settings

- Developing verification methods for pseudo-label quality

- Tackling noisy labels via robust AL-integrated denoising