The images in this dataset were sourced from Imperial College Healthcare NHS Trust. The dataset comprises 100 apical four-chamber (A4C) echocardiography videos collected in 2019, with a closely balanced gender distribution (47% male, 53% female) and a median age of 60 years (interquartile range: 48.5–73.0). For each video, one end-diastolic (ED) frame and one end-systolic (ES) frame were extracted, giving a total of 200 images. Each image was annotated independently by 11 experts, who were blinded to one another's annotations. The dataset serves as an independent external test set for evaluation.

We are releasing this dataset publicly to promote further research in the field and to encourage the validation and benchmarking of deep learning models against multi-expert labelled data, with the goal of improving model reliability and generalizability. By offering this resource, we aim to support more rigorous evaluation, enabling researchers to test and refine their models in diverse and challenging scenarios. Ultimately, this should help improve the performance of deep learning in echocardiography and broaden its application.

The echocardiograms were acquired during examinations performed by experienced echocardiographers, following standard protocols. Ethical approval was obtained from the Health Regulatory Agency for the anonymised export of large quantities of imaging data. As the data was originally acquired for clinical purposes, individual patient consent was not required.

The dataset (images and labels) is available under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license. The release of the associated dataset received a Favourable Opinion from the South Central - Oxford C Research Ethics Committee (Integrated Research Application System identifier 279328, 20/SC/0386).

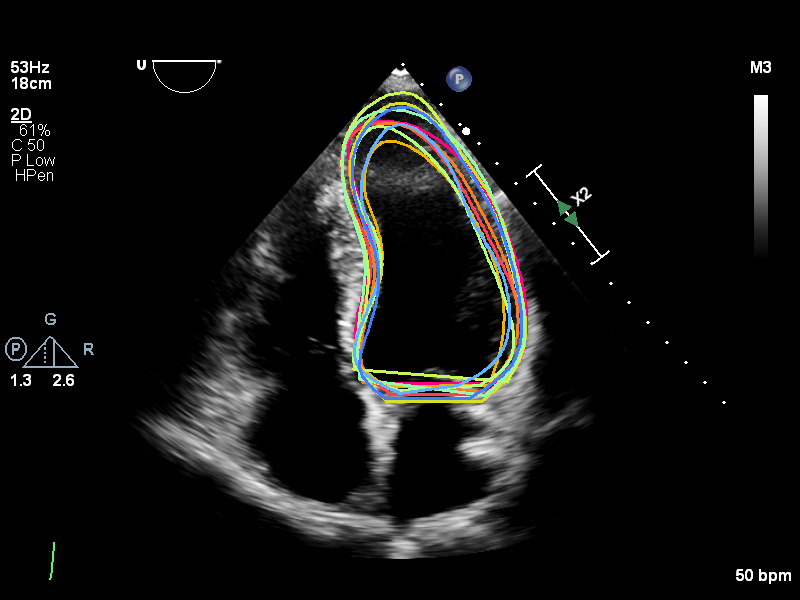

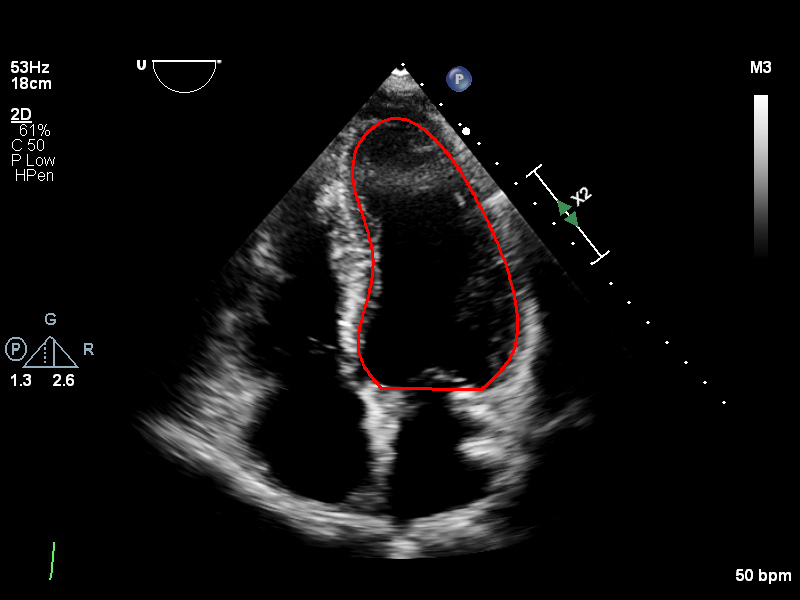

The left image shows an example of the multi-expert annotations in this dataset; the right image shows the corresponding consensus curve.

The dataset package available for download includes the following:

UnityLV_multiX_annotations.json

The file contains annotations for the external test dataset of 100 studies. Each study has one end-diastolic (ED) frame and one end-systolic (ES) frame, each annotated by 11 experts.

Each study includes two frames: the ED frame appears first, followed by the ES frame.
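After downloading, these counts can be checked programmatically. The sketch below is a minimal Python example that assumes the top-level JSON object maps each image name to an object keyed by anonymised userId; that layout is inferred from the description of the file, not taken from a documented schema. The function names `load_annotations` and `summarise_annotations` are hypothetical.

```python
import json
from collections import Counter
from typing import Any, Dict


def load_annotations(path: str) -> Dict[str, Any]:
    """Read the annotation JSON file from wherever it was saved locally."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarise_annotations(annotations: Dict[str, Any]) -> Dict[str, Any]:
    """Count images and how many annotators each image has.

    Assumed (hypothetical) layout: {image_name: {userId: ..., ...}, ...}.
    """
    annotators_per_image = {name: len(users) for name, users in annotations.items()}
    return {
        "n_images": len(annotators_per_image),
        # Maps "number of annotators" -> "number of images with that many annotators".
        "annotator_count_histogram": Counter(annotators_per_image.values()),
    }
```

For the full release, the description above implies `n_images == 200` and a histogram of `{11: 200}`; any other result would suggest missing or extra annotations, or a file layout different from the one assumed here.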

Example:

02-008cb0fa74df88e44971367a2291e4a2969741e21bafbeffdb27a0b0b72e29d3-0003.png

02-008cb0fa74df88e44971367a2291e4a2969741e21bafbeffdb27a0b0b72e29d3-0041.png

In this example, 02-008cb0fa... represents the unique identifier for the study (video), while 0003 and 0041 are the frame numbers corresponding to the ED and ES phases, respectively.
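The naming convention can be parsed with a few lines of Python. This minimal sketch assumes that the frame number is always the last hyphen-separated field before the `.png` extension and that everything before it is the study identifier, as in the example above; `parse_image_name` and `pair_ed_es` are hypothetical helper names. Pairing relies on the order in which names appear (ED first, then ES) rather than on comparing frame numbers, because the description specifies the order, not that the ED frame number is always smaller.

```python
import os
from typing import Dict, List, Tuple


def parse_image_name(name: str) -> Tuple[str, int]:
    """Split '<study_id>-<frame>.png' into (study_id, frame_number)."""
    stem, _ext = os.path.splitext(os.path.basename(name))  # drop any directory and '.png'
    study_id, frame = stem.rsplit("-", 1)  # frame number follows the last hyphen
    return study_id, int(frame)


def pair_ed_es(names: List[str]) -> List[Tuple[str, str, str]]:
    """Group ordered image names into (study_id, ed_name, es_name) triples.

    Assumes names are listed in file order, with each study's ED frame
    before its ES frame; frame numbers are not used to decide which is which.
    """
    by_study: Dict[str, List[str]] = {}
    for name in names:
        study_id, _frame = parse_image_name(name)
        by_study.setdefault(study_id, []).append(name)  # dicts keep insertion order

    pairs = []
    for study_id, frames in by_study.items():
        if len(frames) != 2:
            raise ValueError(f"expected 2 frames for study {study_id}, found {len(frames)}")
        ed_name, es_name = frames
        pairs.append((study_id, ed_name, es_name))
    return pairs
```

Applied to the two example file names, `pair_ed_es` returns a single triple whose second element is the `-0003.png` (ED) frame and whose third is the `-0041.png` (ES) frame. If the JSON file is loaded with `json.load`, iterating over the resulting dict yields the image names in file order.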

The JSON file is organised by image name, matching the file names in the Images folder. For each image, it stores one ([X],[Y]) coordinate tuple per expert, keyed by anonymised userId.
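A minimal sketch for turning each expert's coordinates into a list of (x, y) points is shown below. It assumes each userId maps to a two-element list `[[x1, x2, ...], [y1, y2, ...]]`, which is one reading of the "([X],[Y]) coordinate tuples" described above; check this against the downloaded file before relying on it. `to_points` and `load_expert_points` are hypothetical names.

```python
import json
from typing import Dict, List, Tuple

Point = Tuple[float, float]
ExpertCurves = Dict[str, Dict[str, List[Point]]]  # image name -> userId -> points


def to_points(raw: dict) -> ExpertCurves:
    """Convert {image: {userId: [[X...], [Y...]]}} into {image: {userId: [(x, y), ...]}}.

    The input layout is an assumption inferred from the file description.
    """
    curves: ExpertCurves = {}
    for image_name, experts in raw.items():
        curves[image_name] = {}
        for user_id, (xs, ys) in experts.items():
            if len(xs) != len(ys):
                raise ValueError(
                    f"{image_name}/{user_id}: {len(xs)} x values but {len(ys)} y values"
                )
            # Keep points in stored order so any ordering along the curve is preserved.
            curves[image_name][user_id] = [(float(x), float(y)) for x, y in zip(xs, ys)]
    return curves


def load_expert_points(path: str) -> ExpertCurves:
    """Load the annotation JSON from `path` and convert it with to_points."""
    with open(path, encoding="utf-8") as f:
        return to_points(json.load(f))
```

Points are kept in the order stored in the file, so whatever ordering the annotation platform recorded along each curve is preserved for later processing.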

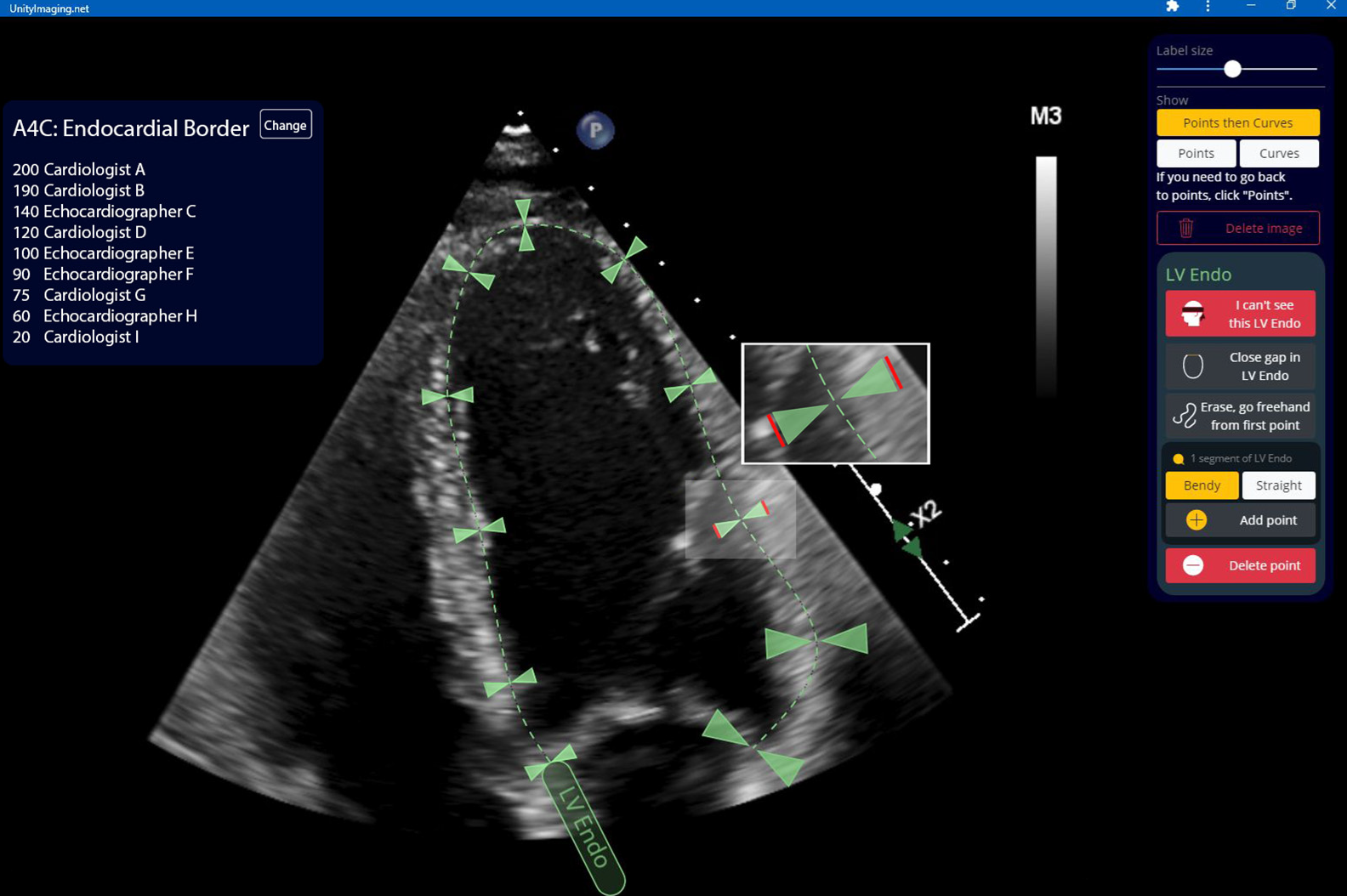

This dataset was annotated using the Unity platform at https://data.unityimaging.net.

Unity is a web-based, interactive, real-time annotation platform that enables clinical experts across the UK to label medical images collaboratively and efficiently. Its intuitive interface allows experts to annotate key anatomical structures precisely, including key points and curves along the endocardial border, as illustrated above. Because the platform is accessible and works in real time, it supports large-scale multi-expert annotation, improving the quality and accuracy of labelled datasets for medical image analysis.

If you wish to request access, please complete the form below: